Facial recognition in CCTV analytics turns cameras into collectors of biometric data, raising urgent questions about GDPR compliance, privacy controls, and who is truly watching.

Modern surveillance has transformed beyond simple recording. Facial recognition systems now extract biometric data through CCTV analytics, creating identity maps that demand strict GDPR compliance and comprehensive privacy controls. These technologies turn ordinary cameras into sophisticated tracking networks, raising fundamental questions about personal autonomy and digital rights. You’re not just being recorded anymore. You’re being identified, analyzed, and catalogued.

The New Age of Watching: How Surveillance Became Personal

Traditional cameras simply captured footage. They didn’t know who you were. That era has ended. Today’s systems actively identify individuals through advanced algorithmic processing. This shift fundamentally changes the relationship between observers and the observed. Surveillance no longer provides anonymous protection—it creates permanent digital identities.

The technology works by modeling faces mathematically. A camera captures your image. Processors map the distinctive geometry of your face into a numerical template. Algorithms compare that template against databases of stored templates. The entire process can complete in milliseconds. You walk past a camera. The system may know your identity before you’ve taken another step, and the match can be logged.
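The comparison step can be sketched in a few lines. This is a minimal illustration, not how any particular product works: it assumes faces have already been reduced to numeric vectors, and the names `cosine_similarity`, `best_match`, the toy three-number templates, and the 0.8 threshold are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two equal-length vectors, from -1.0 to 1.0."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def best_match(probe, gallery, threshold=0.8):
    """Return (identity, score) for the closest stored template.

    Identity is None when no stored template reaches the threshold
    (an assumed value; real systems tune it per deployment).
    """
    best_id, best_score = None, -1.0
    for identity, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_id, best_score = identity, score
    if best_score < threshold:
        return None, best_score
    return best_id, best_score

# Toy gallery: real templates have hundreds of dimensions.
gallery = {"person_a": [1.0, 0.0, 0.0], "person_b": [0.0, 1.0, 0.0]}
identity, score = best_match([0.9, 0.1, 0.0], gallery)
```

The threshold is where the trade-off lives. Lower it and more strangers get matched to stored identities. Raise it and more enrolled people go unrecognized.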

The Shift From Cameras to Facial Recognition Systems

Here’s the thing: early CCTV systems were passive. They recorded everything indiscriminately. Operators had to review footage manually to find specific individuals. This labor-intensive process limited what surveillance could do. Modern facial recognition changed everything. Cameras became active participants in identification.

The transformation started with edge-based processing. Cameras gained onboard computing power. They don’t just send raw video anymore. Instead, they analyze images locally. This distributed intelligence enables real-time identification across vast networks. A single person can now monitor thousands of cameras simultaneously.

Deep learning models drive these capabilities. Neural networks train on millions of faces. They learn to distinguish between individuals with remarkable accuracy. The systems can often identify people despite varying lighting conditions, different angles, or partial obstructions. Glasses rarely fool them. Neither do hats or facial hair.

Why Facial Recognition Feels More Like Being Observed Than Recorded

Traditional recording felt impersonal. Nobody watched the footage unless something happened. Facial recognition creates constant awareness. The system knows you’re there. It tracks your movements. It remembers your patterns. This persistent identification triggers psychological responses that simple recording never did.

You can’t blend into crowds anymore. Each appearance gets logged. Your movement patterns become data points. The system builds behavioral profiles whether you consent or not. This continuous tracking transforms public spaces into digital fishbowls. Privacy becomes impossible even in theoretically public areas.

The permanence amplifies these concerns. Recorded footage eventually gets overwritten. Facial recognition data can persist indefinitely unless someone deletes it. Your biometric signature becomes a lifetime identifier. Once captured and retained, there’s no taking it back.

How CCTV Analytics Turn Everyday Spaces Into Identity Maps

Shopping malls become tracking grids. Each camera becomes a checkpoint. The system logs every appearance. It knows which stores you visit. How long you stayed. Whether you purchased anything. These granular movement traces reveal intimate details about your life.

Office buildings track employee movements with surgical precision. Entry and exit times get recorded automatically. Break patterns emerge from data analysis. The system knows who talks to whom. Meeting attendance becomes automatically verified. Productivity metrics derive from movement data.

Public spaces offer no escape. Streets, parks, transit stations—all feature networked cameras. These systems create citywide surveillance networks. Law enforcement accesses this infrastructure for investigations. Private companies sell access to whoever pays. Your daily routines become commodified information.

Facial Recognition as the Core of Modern Surveillance Power

The technology represents unprecedented identification capabilities. It operates at scale. Processing happens in milliseconds. Accuracy continues improving. Error rates drop with each algorithmic iteration. This creates surveillance infrastructure that would have seemed impossible a decade ago.

Integration with other systems multiplies its power. Facial recognition links to access control databases. It connects with criminal record systems. Social media provides additional training data. These interconnected networks build comprehensive digital profiles. Your face becomes the key to vast information repositories.

Facial Recognition in Public Streets and Private Spaces

Public deployment raises immediate concerns. Cities install facial recognition cameras throughout urban environments. Transit systems implement automated fare verification. Retailers track customer movements through stores. Each deployment expands the surveillance perimeter.

Private spaces present different challenges. Apartment buildings use facial recognition for access. Office complexes replace keycards with biometric verification. Gyms and clubs implement member identification. The technology permeates every aspect of daily life.

The boundary between public and private blurs. Cameras monitor sidewalks outside homes. Doorbell cameras capture passersby. Aggregated footage from private devices creates de facto public surveillance. Nobody consented to this distributed tracking network.

How Facial Recognition Tracks Us Without Our Awareness

You don’t see the identification happening. Cameras blend into architecture. Processing occurs on remote servers. Alerts trigger silently. The entire system operates invisibly. You have no way of knowing when you’re being identified.

Covert deployment amplifies these issues. Some cameras hide in everyday objects. Others look like security devices but include facial recognition. Organizations rarely announce these capabilities. The lack of transparency makes informed consent impossible.

Even disclosed systems escape notice. People become desensitized to cameras. Warning signs get ignored. Habituation replaces vigilance. You stop thinking about surveillance. The systems continue operating regardless.

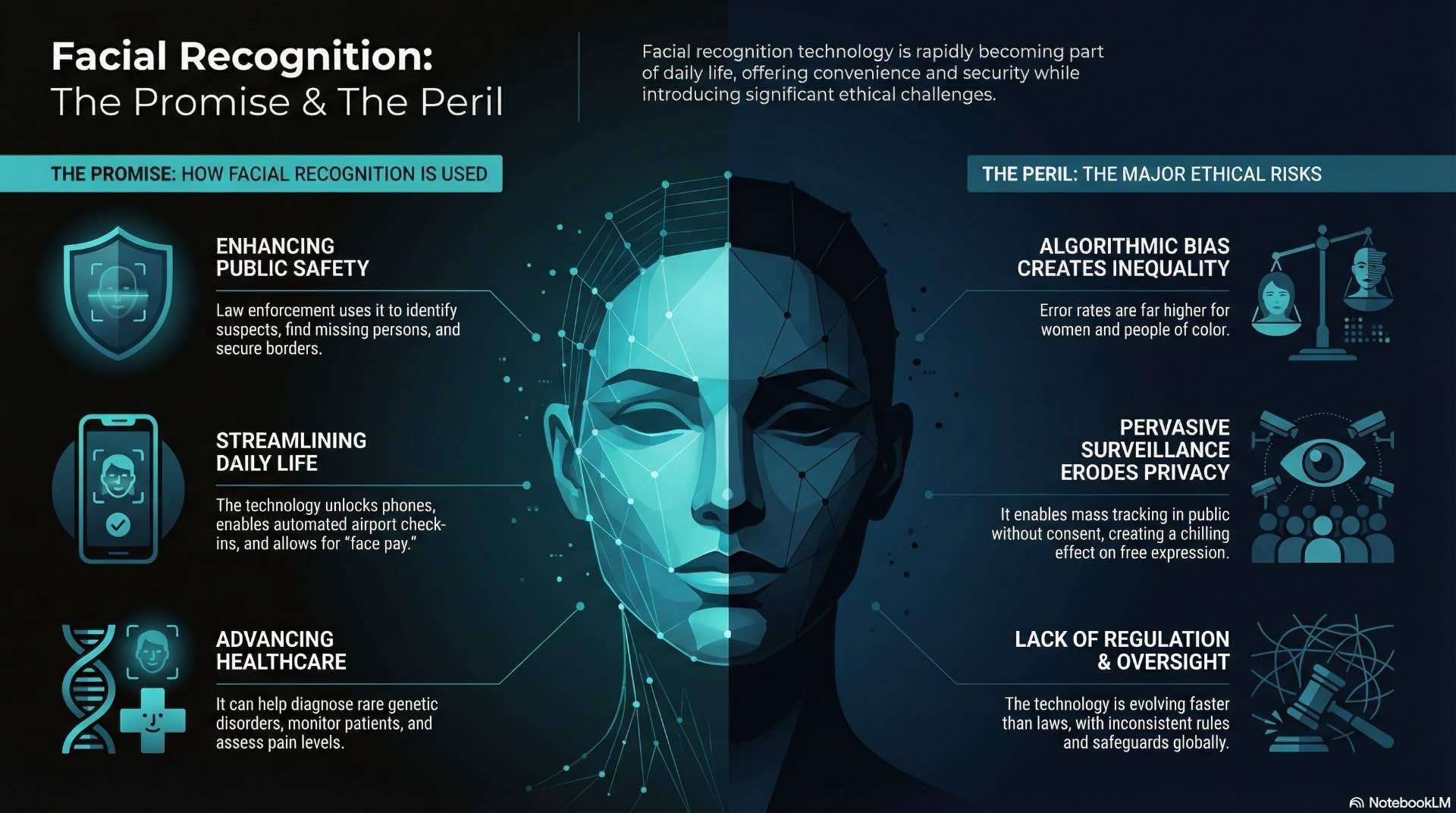

The Unseen Risks When Facial Recognition Gets It Wrong

False positives create serious consequences. The system matches you to someone else. You get stopped by security. Police question you. Your movements get flagged for investigation. These misidentification events disrupt lives and damage reputations.

Accuracy varies dramatically across demographics. Many systems perform worse on some ethnic groups than on others. Women are often misidentified more than men. Age affects recognition rates. These algorithmic biases create discriminatory outcomes. Some people face disproportionate surveillance simply because the technology works poorly on their faces.
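One way to make this measurable is to compute the false match rate separately for each group. The sketch below assumes you already have labeled test trials; the function name, the trial format, and the group labels are hypothetical, and the numbers are invented purely to show the arithmetic.

```python
from collections import defaultdict

def false_match_rate_by_group(trials):
    """Compute the false match rate per demographic group.

    trials: iterable of (group, same_person, system_said_match).
    Only impostor trials (same_person is False) count toward the rate.
    """
    impostor_trials = defaultdict(int)
    false_matches = defaultdict(int)
    for group, same_person, said_match in trials:
        if not same_person:
            impostor_trials[group] += 1
            if said_match:
                false_matches[group] += 1
    return {g: false_matches[g] / impostor_trials[g] for g in impostor_trials}

# Invented example data, not measurements from any real system.
trials = [
    ("group_1", False, True), ("group_1", False, False),
    ("group_1", False, False), ("group_1", False, False),
    ("group_2", False, True), ("group_2", False, True),
    ("group_2", False, False), ("group_2", False, False),
    ("group_2", True, True),  # genuine match, ignored by this metric
]
rates = false_match_rate_by_group(trials)
```

A single headline accuracy figure hides exactly this kind of gap. Reporting the rate per group makes the disparity visible.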

Technical failures compound these problems. Lighting affects accuracy. Camera angles matter. Image quality determines reliability. Environmental variables create unpredictable error rates. Organizations deploy these systems anyway, accepting collateral damage from false matches.

What Counts as Biometric Data Under GDPR

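European law defines biometric data precisely. Under Article 4(14) of the GDPR, it covers personal data resulting from specific technical processing of a person’s physical, physiological, or behavioural characteristics that allows or confirms their unique identification. Facial images processed this way qualify. So can iris patterns, fingerprints, and voiceprints. The definition encompasses more than most people realize.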

Processing requirements are strict. Organizations must establish a legal justification before any processing begins. Consent alone rarely suffices. Public interest claims face intense scrutiny. Commercial applications require careful legal analysis. The bar for legitimate processing sits extremely high, and effective privacy controls become mandatory alongside it.

Facial Recognition vs Other Biometric Identifiers

Faces present unique challenges. You can’t change your facial structure. Hiding it in public draws attention. Involuntary collection happens constantly. This differs from fingerprints, which require active participation. Facial recognition operates without cooperation.

The technology captures at a distance. Cameras identify people from dozens of meters away. Subjects don’t need to approach readers. They don’t touch anything. Simply being visible suffices for identification. This remote capture capability eliminates traditional consent mechanisms.

Permanence distinguishes faces from other identifiers. Fingerprints can be obscured. Voices can be disguised. Faces remain constantly exposed. You can’t hide them during normal daily activities. This unavoidable exposure makes facial recognition particularly invasive.

Behavioural Patterns and Gait as Covert Biometric Data

Gait analysis extracts identity from walking patterns. Everyone moves distinctively. Stride length, arm swing, posture—these create unique signatures. Cameras capture these patterns from angles that hide faces. The analysis happens without facial recognition at all.

This extends biometric collection beyond obvious identifiers. You might cover your face. The system identifies you anyway through movement characteristics. Behavioral biometrics operate as supplementary tracking methods. They fill gaps left by traditional facial recognition.

The GDPR treats these identifiers as biometric data when they are processed to identify someone. The same protections apply. Organizations can’t collect gait patterns without justification. Processing restrictions extend to all uniquely identifying characteristics. The law recognizes that identification doesn’t require faces.

How CCTV Analytics Extract Identity From Ordinary Footage

Modern systems don’t just record—they analyze. Object detection algorithms identify people in frames. Tracking algorithms follow individuals across multiple cameras. Metadata extraction creates searchable records. The raw footage becomes an indexed database of movements and identities.

Facial recognition integrates into these pipelines. The system detects a person. It extracts facial features. Algorithms match against known individuals. Results get tagged to the video stream. This automated enrichment transforms passive recording into active identification.

Storage systems preserve both video and extracted data. You can search by identity rather than reviewing footage manually. Query capabilities rival traditional databases. Law enforcement can find every appearance of a specific person. Companies track customer visits automatically. The analytical power far exceeds simple recording.
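Searching by identity becomes trivial once each match is stored as a structured record. Here is a minimal sketch of that idea, assuming matches arrive as simple records. The `Sighting` fields and `build_index` are hypothetical names, not any vendor’s schema.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Sighting:
    identity: str      # label produced by the matching step
    camera_id: str
    timestamp: float   # seconds since some reference point

def build_index(sightings):
    """Group sightings by identity, each list sorted by time."""
    index = defaultdict(list)
    for sighting in sightings:
        index[sighting.identity].append(sighting)
    for entries in index.values():
        entries.sort(key=lambda s: s.timestamp)
    return index

sightings = [
    Sighting("person_a", "cam_lobby", 30.0),
    Sighting("person_b", "cam_gate", 12.0),
    Sighting("person_a", "cam_gate", 10.0),
]
index = build_index(sightings)
route = [s.camera_id for s in index["person_a"]]
```

One dictionary lookup now returns a person’s full route through the camera network. That is why extracted metadata deserves at least as much protection as the footage itself.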

GDPR’s Strictest Rules for Facial Recognition

European regulations treat facial recognition data as special-category information. This designation triggers the highest protection levels. Organizations face stringent compliance requirements, including mandatory privacy controls for CCTV analytics deployments. Violations carry substantial penalties. The regulatory framework aims to prevent surveillance overreach by governing how CCTV analytics process biometric data.

Processing must meet narrow legal criteria. Public interest requires clear demonstration. Commercial applications face particularly high bars. Organizations can’t simply collect faces because the technology exists. They need documented justification that survives regulatory scrutiny.

Why Facial Recognition Is Classified as Special-Category Data

The classification reflects the inherent sensitivity of biometric data. Facial data can reveal ethnic origin. It can’t be separated from protected characteristics. This makes all facial recognition processing inherently sensitive. Standard data protection rules don’t provide adequate safeguards without enhanced privacy controls.

Abuse potential drives the classification. Faces enable comprehensive, continuous surveillance. Historical examples demonstrate totalitarian applications of surveillance. Democratic societies recognize these risks. Special-category designation provides enhanced protections against misuse through mandatory privacy controls and limits on processing.

The permanence matters. You can change a password. You can’t change your face. Once compromised, facial biometric data remains vulnerable forever, making privacy controls essential. This irreversible exposure justifies exceptional protection requirements.

Legal Boundaries Around Facial Recognition in Europe

Legitimate interest claims rarely succeed for biometric processing, because special-category data also needs one of the narrow exceptions listed in Article 9(2). Regulators and courts apply strict scrutiny to such justifications. Organizations must prove necessity beyond convenience. Less intrusive alternatives must be unavailable or unreasonable before deployment. The threshold remains deliberately high.

Consent provides limited authorization. It must be freely given, specific, and, for biometric data, explicit. Employees can rarely give meaningful consent to workplace surveillance. Power imbalances invalidate consent in many contexts. Voluntary agreement rarely exists in hierarchical relationships.

Public authority use requires statutory authorization. Agencies can’t deploy facial recognition on their own initiative. Legislative approval becomes mandatory. This prevents administrative overreach. Democratic accountability becomes essential.

The DPIA Requirement for Facial Recognition Deployments

Data Protection Impact Assessments are typically mandatory for CCTV analytics that process biometric data. Organizations must evaluate risks before deployment. This includes technical vulnerabilities and social implications. Mitigation strategies must address identified concerns. Regulators can request these assessments, and must be consulted in advance when high risks remain unmitigated.

The process forces systematic risk analysis. Organizations can’t ignore potential harms. They must document their decision-making. Accountability mechanisms create audit trails that demonstrate compliance. This transparency enables regulatory oversight.

DPIAs must be updated regularly. Technology changes require reassessment. New use cases trigger fresh reviews. The requirement creates ongoing compliance obligations. Organizations can’t perform a single assessment and forget about it.

The Dark Side of AI: Accuracy, Bias, and Misidentification

Algorithmic systems inherit human prejudices. Training data reflects societal inequalities. Machine learning models amplify these biases. The result: systematically discriminatory outcomes. Some demographics face excessive false positives. Others escape identification entirely.

Technical limitations create additional problems. Lighting affects accuracy significantly. Camera angles matter enormously. Image resolution determines reliability. These environmental dependencies create unpredictable performance. Real-world conditions rarely match controlled testing environments.

Facial Recognition Errors and Their Real-World Consequences

Misidentification leads to wrongful arrests. People get detained based on algorithmic mistakes. Innocent individuals face interrogation. Criminal charges sometimes follow. The consequences extend beyond inconvenience into life-altering territory.

Financial harm results from errors. Banks deny service based on false matches to watchlists. Employment opportunities disappear. Housing applications get rejected. Automated decision-making provides no recourse or explanation.

Social stigma accompanies false accusations. Reputations suffer even after errors get corrected. Digital records persist indefinitely. Search results preserve outdated associations. The permanence of digital information extends harm beyond the initial incident.

When CCTV Analytics Reinforce Bias or Create New Ones

Training data determines algorithmic behavior. Underrepresented groups appear less frequently in training datasets. Models learn to identify them poorly. This creates systematic disadvantage for minority populations.

Deployment patterns amplify inequality. Surveillance concentrates in specific neighborhoods. These areas face disproportionate monitoring. Residents experience heightened scrutiny regardless of individual behavior. Geographic discrimination becomes technologically enforced.

Alert thresholds create differential treatment. Systems flag certain demographics more readily. Confirmation bias reinforces operator suspicions. The feedback loop strengthens discriminatory patterns. Technology automates and scales human prejudice.

Can AI Be Trusted With Sensitive Biometric Data?

Current systems lack transparent decision-making. Neural networks operate as black boxes. Developers can’t fully explain specific identifications. This opacity prevents meaningful accountability. You can’t challenge decisions you don’t understand.

Security vulnerabilities persist throughout the pipeline. Cameras can be spoofed. Network traffic can be intercepted, exposing biometric data in transit. Databases suffer breaches, leaking stored templates. Each weak point creates exploitation opportunities. Attackers target these systems constantly because biometric data is a valuable intelligence asset.

Model drift degrades performance over time. Accuracy decreases without constant retraining. Organizations deploy systems then neglect maintenance. Performance degradation happens silently. Users never know reliability has declined.
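Silent degradation is avoidable if someone keeps score. The sketch below tracks accuracy over a rolling window of human-verified outcomes and compares it with the accuracy measured at deployment. It assumes such verified outcomes exist. `DriftMonitor`, the window size, and the tolerance are hypothetical choices.

```python
from collections import deque

class DriftMonitor:
    """Compare rolling accuracy against a baseline measured at deployment."""

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance           # assumed acceptable drop
        self.outcomes = deque(maxlen=window)  # keeps only recent results

    def record(self, was_correct):
        """Store whether a human reviewer confirmed the system's decision."""
        self.outcomes.append(bool(was_correct))

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drifted(self):
        """True when recent accuracy fell below baseline minus tolerance."""
        accuracy = self.rolling_accuracy()
        return accuracy is not None and accuracy < self.baseline - self.tolerance

monitor = DriftMonitor(baseline_accuracy=0.95, window=10)
for confirmed in [True] * 8 + [False] * 2:
    monitor.record(confirmed)
needs_review = monitor.drifted()
```

The check only works if reviewers keep feeding it verified outcomes. Without that ground truth, the decline stays invisible.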

How Far Can They Go? Surveillance Overreach and Abuse Risks

Legal limits exist on paper. Enforcement remains inconsistent. Organizations push boundaries constantly. Regulatory agencies lack resources for comprehensive oversight. Compliance gaps create de facto permission for questionable practices.

Technical capabilities exceed legal frameworks. Regulations can’t keep pace with innovation. New identification methods emerge faster than legislative responses. This governance lag creates prolonged periods of unregulated deployment.

Scenarios Where Facial Recognition Crosses Ethical Lines

Workplace monitoring extends to break rooms and bathrooms. Employers track emotional states through facial analysis. Performance reviews incorporate behavioral data extracted from surveillance. These practices violate reasonable privacy expectations despite potential legality.

Retail tracking follows customers across multiple visits. Systems build purchase histories without disclosure. Prices adjust based on perceived wealth extracted from appearance analysis. This discriminatory pricing operates invisibly to consumers.

Political surveillance targets activists and protesters. Authorities identify demonstration participants automatically. This chilling effect suppresses democratic participation. People avoid exercising rights knowing identification becomes permanent.

Hidden Uses of CCTV Analytics in Behaviour Prediction

Systems claim to predict shoplifting through behavioral indicators. Algorithms flag suspicious movements preemptively. Security confronts people before any crime occurs. These predictive interventions treat individuals as criminals based on statistical patterns.

Emotional analysis promises to detect hostile intent. Cameras assess facial expressions for threat indicators. The pseudoscientific foundations remain unproven. Organizations deploy these systems anyway, treating high error rates as acceptable costs.

Marketing applications analyze demographic characteristics automatically. Systems categorize viewers by perceived attributes. Advertisements target individuals based on algorithmic assessments. This automated profiling happens without consent or awareness.

Mission Creep: When Small Surveillance Expands Unnoticed

Pilot programs start with narrow scope. Organizations promise limited use cases. Success leads to gradual expansion. New applications emerge incrementally. Oversight mechanisms fail to track scope growth.

Access expands beyond original users. More departments gain query capabilities. External organizations request access. Data sharing becomes routine. The surveillance infrastructure supports uses never contemplated during deployment.

Retention periods extend indefinitely. Temporary storage becomes permanent archives. Technical capabilities enable decades of retention. Organizations exploit these capabilities despite original commitments to limited storage.

Facial Recognition in Edge vs Cloud Processing Models

Architectural choices determine data exposure. Edge processing keeps data local by extracting biometric features at the camera. Cloud models transmit footage off-site for analysis. Each approach creates distinct risks and calls for different privacy controls. Organizations must carefully evaluate the security implications of each deployment model.

Hybrid systems combine both approaches. Some processing happens locally. Complex analysis occurs in cloud infrastructure. This distributed architecture multiplies potential vulnerabilities. Each handoff point creates interception opportunities.

Data Exposure Risks in Cloud-Based Facial Recognition

Transmission creates interception windows. Data crosses multiple networks before reaching processors. Each hop represents a vulnerability. Encryption provides protection but introduces key management challenges. Privacy controls must account for these transmission risks.

Cloud providers control the infrastructure. Customers rely on third-party security. This dependency moves breach risk outside the customer’s control. When providers suffer breaches, customer data becomes exposed. Liability questions often remain unresolved when third parties process biometric data.

Jurisdictional issues complicate compliance. Data crosses international borders. Different legal regimes apply to storage and processing. Organizations lose direct control over information handling. Regulatory requirements may conflict.

On-Device Facial Recognition and Localised Threats

Edge processing reduces transmission risks. Data stays on local devices. This containment approach limits exposure. However, physical security becomes critical. Stolen devices compromise all stored data.

Local processing requires capable hardware. This increases deployment costs. Maintenance complexity grows with every device added. Each device needs independent updates. Configuration drift creates security vulnerabilities.

Even so, on-device computing power stays limited. Some complex analysis remains impractical. Accuracy can suffer compared to cloud systems. Organizations sacrifice performance for data localization. The tradeoff isn’t always acceptable.

Hybrid CCTV Analytics and Their Privacy Implications

Combined architectures promise optimal performance. Edge devices handle initial filtering. Cloud systems process flagged events. This reduces bandwidth requirements while preserving analytical power.
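Here is a rough sketch of that edge-side filtering, under stated assumptions: events are plain dictionaries, "flagged" means a confidence score above a cutoff, and only a few metadata fields leave the device. The function name, field names, and the 0.9 cutoff are all hypothetical.

```python
def edge_filter(events, min_confidence=0.9):
    """Split events into those forwarded to the cloud and those kept local.

    Forwarded events carry only minimal metadata; the raw frame
    never leaves the device in this sketch.
    """
    forwarded, kept_local = [], []
    for event in events:
        if event["confidence"] >= min_confidence:
            forwarded.append({
                "camera_id": event["camera_id"],
                "timestamp": event["timestamp"],
                "confidence": event["confidence"],
            })
        else:
            kept_local.append(event)
    return forwarded, kept_local

events = [
    {"camera_id": "cam_1", "timestamp": 100.0, "confidence": 0.95, "frame": b"raw"},
    {"camera_id": "cam_1", "timestamp": 101.0, "confidence": 0.50, "frame": b"raw"},
]
forwarded, kept_local = edge_filter(events)
```

Writing the forwarding rule down as code also documents exactly which fields cross the boundary, which helps when mapping data flows.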

However, hybrid models complicate privacy assessment. Some data stays local. Other information gets transmitted. Tracking flows becomes difficult. Organizations struggle to maintain comprehensive documentation.

Failure modes multiply in hybrid systems. Either component can malfunction. Fallback behaviors may compromise privacy unexpectedly. Testing all scenarios becomes practically impossible. Unknown vulnerabilities persist.

Technical Protections for GDPR-Safe Sensitive Analytics

Compliance requires layered security. Organizations must implement multiple safeguards. Single protections prove insufficient. Defense-in-depth becomes mandatory for sensitive biometric processing.

Privacy-by-design principles guide implementation. Security integrates from initial development. Retrofitted protection proves inadequate. Organizations must build privacy into the core architecture of every system that processes biometric data.

Encryption Requirements for Facial Recognition Data

Data requires encryption at rest and in transit. Storage systems must protect against physical theft. Network transmission needs end-to-end encryption. Unencrypted storage of biometric data is very hard to reconcile with the GDPR’s security requirements.

Key management presents significant challenges. Organizations must protect cryptographic keys as carefully as data itself. Key rotation becomes operationally complex. Recovery mechanisms must prevent data loss without compromising security.

Processing encryption remains technically difficult. Some analysis requires plaintext access. This creates temporary vulnerabilities during computation. Secure enclaves provide partial solutions but add complexity.

Access Controls and Logging for High-Risk CCTV Analytics

Role-based permissions restrict system access. Only authorized personnel can query biometric data. Authentication mechanisms verify user identity. Multi-factor verification becomes mandatory for sensitive operations.
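The permission check itself can be very small. The following is a minimal sketch, assuming a fixed mapping from roles to actions. The role names, action names, and `is_allowed` are hypothetical and would differ in any real deployment.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "operator": {"view_live"},
    "investigator": {"view_live", "query_identity"},
    "dpo": {"view_live", "view_audit_log"},
}

# Actions that additionally require a completed multi-factor check.
SENSITIVE_ACTIONS = {"query_identity"}

def is_allowed(role, action, mfa_verified=False):
    """Deny by default; allow only listed actions, with MFA where required."""
    if action not in ROLE_PERMISSIONS.get(role, set()):
        return False
    if action in SENSITIVE_ACTIONS and not mfa_verified:
        return False
    return True
```

For example, `is_allowed("investigator", "query_identity")` is refused until `mfa_verified=True` is passed, and an unknown role gets nothing. Denying by default means a forgotten entry locks someone out rather than letting them in.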

Every access gets logged comprehensively. Audit trails record who accessed what information when. Tamper-resistant logging prevents evidence destruction. These records enable forensic investigation of potential misuse.
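One common way to make a log tamper-evident is to chain entries together with hashes, so that editing any earlier record breaks every later one. The sketch below illustrates that technique using Python’s standard `hashlib` and `json` modules. The entry fields and function names are hypothetical, and timestamps are passed in by the caller.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry
FIELDS = ("user", "action", "target", "timestamp", "prev")

def _digest(body):
    """SHA-256 over a canonical JSON encoding of the entry body."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()

def append_entry(log, user, action, target, timestamp):
    """Append an entry whose hash covers its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"user": user, "action": action, "target": target,
            "timestamp": timestamp, "prev": prev_hash}
    log.append({**body, "hash": _digest(body)})

def verify_chain(log):
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        body = {key: entry[key] for key in FIELDS}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_entry(log, "analyst_1", "query_identity", "person_a", "2024-01-01T10:00:00Z")
append_entry(log, "analyst_2", "view_footage", "cam_gate", "2024-01-01T10:05:00Z")
```

A chain like this detects edits but doesn’t prevent them. Someone who can rewrite the whole log can recompute every hash, so the latest hash should also be stored somewhere the log’s administrators can’t reach.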

Regular access reviews identify anomalous patterns. Organizations must actively monitor usage. Automated alerts flag suspicious queries. This ongoing oversight prevents abuse before significant harm occurs.

Automated Redaction and Masking for Public Recording

Privacy filters obscure identifiable features automatically. Faces get blurred in real-time processing. Only flagged events preserve unredacted footage. This default obscuration minimizes exposure.

Selective revelation requires documented justification. Authorized users can access original footage for legitimate purposes. The system logs these de-anonymization events. Accountability mechanisms prevent casual access.
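Default masking and logged reveal can be sketched together. This toy version treats an image as a grid of numbers and assumes face locations come from a separate detector. `mask_region`, `reveal_original`, and the log format are hypothetical names for illustration.

```python
def mask_region(image, top, left, height, width, fill=0):
    """Return a copy of the image with one rectangle overwritten.

    image is a list of rows of pixel values; the original is untouched.
    Coordinates outside the image are clipped.
    """
    masked = [row[:] for row in image]
    for r in range(max(top, 0), min(top + height, len(masked))):
        for c in range(max(left, 0), min(left + width, len(masked[r]))):
            masked[r][c] = fill
    return masked

def reveal_original(image, user, justification, reveal_log):
    """Hand back unmasked footage only with a recorded justification."""
    if not justification.strip():
        raise PermissionError("a documented justification is required")
    reveal_log.append({"user": user, "justification": justification})
    return image

frame = [[9, 9, 9], [9, 9, 9], [9, 9, 9]]
public_view = mask_region(frame, top=0, left=0, height=2, width=2)
```

Real systems blur or pixelate rather than paint a solid block, but the principle is the same: the masked copy is what people see by default, and every request for the original leaves a trace.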

Effectiveness verification presents challenges. Systems must reliably detect and mask faces. Missed detections create privacy breaches. Organizations must continuously test masking accuracy.

Privacy Controls in a World of Ever-Watching Systems

Transparency becomes essential. People deserve to know when their biometric data gets collected. Clear signage must announce facial recognition zones. Detailed information about the processing must be readily available.

Control mechanisms empower individuals. People should be able to access their own biometric data. Correction rights must function effectively. Deletion requests need timely responses. These mechanisms make privacy rights more than abstract concepts.

Transparency Notices for Facial Recognition Zones

Signage must be conspicuous and unambiguous. Generic security camera warnings don’t suffice. Notices must specifically mention facial recognition. Plain language explains what data gets collected and which privacy controls apply.

Information must include controller identity. People need to know who’s responsible. Contact details enable rights exercise. Legal basis for processing should be stated clearly.

Multi-language notices serve diverse populations. Visual symbols aid comprehension. Accessibility requirements extend to visually impaired individuals. Transparency means actual understanding, not mere disclosure.

Retention Rules and Deletion of Biometric Data

Organizations must define maximum retention periods for biometric data from CCTV analytics. Indefinite storage violates the GDPR’s storage limitation principle. Automated deletion should occur after specified intervals. Manual intervention shouldn’t be required.

Purpose limitation restricts retention duration. Data can’t be kept “just in case.” Documented business needs justify retention. When purposes expire, data must disappear.

Verification mechanisms confirm deletion effectiveness. Organizations must prove data actually gets removed. Backup systems can’t bypass deletion obligations. Complete erasure becomes mandatory.
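
Automated deletion with a verifiable trail can be sketched as follows. This assumes each stored record is a dictionary with a hypothetical `id` and a timezone-aware `captured_at` timestamp. The `purge_expired` name and the record layout are illustrative, and a real deployment would also have to apply the same rule to backups.

```python
from datetime import datetime, timedelta, timezone


def purge_expired(records, max_age: timedelta, now=None):
    """Split records into those still within retention and those to delete."""
    now = now or datetime.now(timezone.utc)
    kept, deleted_ids = [], []
    for record in records:
        if now - record["captured_at"] > max_age:
            # Keep only the ID, so the deletion can be evidenced later
            # without retaining the biometric data itself.
            deleted_ids.append(record["id"])
        else:
            kept.append(record)
    return kept, deleted_ids
```

Returning the deleted IDs separately gives auditors something to check against storage and backup systems.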

Accountability When Facial Recognition Is Misused

Organizations face direct liability for violations. Under the GDPR, fines can reach €20 million or 4% of worldwide annual turnover, whichever is higher. Reputational damage extends beyond financial penalties. Public trust once lost proves difficult to rebuild.

Individual recourse must be accessible. Affected people deserve meaningful remedies. Compensation mechanisms address concrete harms. Systemic changes prevent recurrence.

Regulatory enforcement varies considerably. Some authorities act aggressively. Others lack resources or will. This inconsistent oversight creates compliance disparities across jurisdictions.

Governance and Compliance for High-Risk AI Surveillance

Organizational structures must support accountability for CCTV analytics that process biometric data. Data protection officers need genuine authority. Privacy teams require adequate resources. Executive commitment to GDPR compliance can’t be merely performative.

Regular audits verify ongoing compliance. Internal reviews catch problems in biometric data handling early. External assessments provide independent validation of privacy controls. Continuous improvement becomes operational reality.

Controller and Processor Duties in Facial Recognition Systems

Controllers bear primary responsibility. They determine processing purposes. Legal obligations rest with them regardless of outsourcing arrangements. This accountability cannot be delegated.

Processors must follow controller instructions. However, they also have independent duties. Security obligations apply to all parties. Notification requirements trigger when breaches occur.

Contractual arrangements must specify responsibilities clearly. Data processing agreements become mandatory. Liability allocation needs explicit definition. Ambiguity creates compliance risks.

Vendor Risks: When Analytics Providers Fail Compliance

Third-party failures expose organizations to liability for biometric data from CCTV analytics. Careful vendor selection becomes critical. Due diligence must assess the security practices protecting that data. Ongoing monitoring tracks whether vendors keep meeting GDPR requirements.

Contractual protections provide limited insurance. Breaches create immediate customer liability. Vendor bankruptcy complicates data recovery. Organizations must plan for vendor failure.

Technology lock-in creates long-term risks. Migration difficulties trap organizations with problematic vendors. Exit strategies should exist before deployment. Vendor independence preserves options.

Oversight Mechanisms for Sensitive CCTV Analytics

Independent reviews build public confidence. Ethics committees evaluate questionable applications. Community input shapes deployment decisions. Democratic accountability extends beyond organizational boundaries.

Technical audits verify security claims. Penetration testing identifies vulnerabilities. Code reviews catch implementation flaws. These technical safeguards complement policy oversight.

Regular reporting maintains transparency. Public statistics reveal system usage patterns. Trend analysis detects mission creep. Sunlight remains the best disinfectant.

Rebuilding Trust in the Age of AI Surveillance

Current practices have eroded confidence in how CCTV analytics handle biometric data. People distrust surveillance systems that lack proper GDPR compliance. Organizations must earn back public trust through transparent privacy controls. This requires genuine commitment to protecting biometric data.

Ethical frameworks should guide development. Human rights must take precedence over technical capabilities. Organizations need moral courage to refuse profitable but harmful applications.

How to Use Facial Recognition Without Violating Rights

Necessity tests must be rigorous. Alternatives should be exhausted first. Proportionality limits deployments to genuine needs. Least-invasive approaches become default choices.

Meaningful consent requires real alternatives. People shouldn’t sacrifice privacy for essential services. Opt-out options must be practical. No penalty should attach to privacy protection.

Purpose limitation restricts function creep. Systems deployed for security can’t expand into marketing. Documented purposes constrain future uses. Re-authorization becomes necessary for scope changes.
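
In software terms, purpose limitation can be enforced as a gate in front of every query. The sketch below is a simplified assumption of how that might look: the `DOCUMENTED_PURPOSES` set, the `PurposeNotAuthorized` exception, and the `check_purpose` function are hypothetical names, and a real system would tie the documented purposes to a signed-off record, not a constant.

```python
# Assumed example: the deployment was documented for security use only.
DOCUMENTED_PURPOSES = frozenset({"security"})


class PurposeNotAuthorized(Exception):
    """Raised when data is requested for a purpose that was never documented."""


def check_purpose(requested: str, documented=DOCUMENTED_PURPOSES) -> None:
    # Any new purpose must go through re-authorization before it is added here.
    if requested not in documented:
        raise PurposeNotAuthorized(
            f"'{requested}' is not a documented purpose; re-authorization required"
        )
```

With this in place, a call such as `check_purpose("marketing")` fails loudly, so scope cannot expand without anyone noticing.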

Ethical Guardrails for Identity-Based CCTV Analytics

Human oversight must remain central. Automated decisions need human review. Appeal mechanisms allow challenging outcomes. Accountability requires identifiable decision-makers.

Bias testing must be continuous. Demographic performance should be monitored actively. Corrective actions address identified disparities. Fairness becomes measurable and enforced.
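
A simple way to make fairness measurable is to compare false match rates across demographic groups. The sketch below assumes a labelled evaluation set of `(group, predicted_match, true_match)` tuples. The function names are illustrative, and the false match rate is only one of several metrics an organization might track.

```python
from collections import defaultdict


def false_match_rate_by_group(results):
    """results: iterable of (group, predicted_match, true_match) tuples."""
    false_matches = defaultdict(int)
    non_matches = defaultdict(int)
    for group, predicted, actual in results:
        if not actual:
            # Only true non-matches can produce a false match.
            non_matches[group] += 1
            if predicted:
                false_matches[group] += 1
    return {g: false_matches[g] / n for g, n in non_matches.items()}


def max_disparity(rates):
    # Gap between the worst-served and best-served group.
    return max(rates.values()) - min(rates.values()) if rates else 0.0
```

Tracking the disparity over time, and setting a limit that triggers corrective action, is what moves bias testing from a one-off audit to continuous monitoring.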

Transparency extends to algorithmic logic. Explainability enables meaningful review. Documentation supports accountability. Opacity should be rejected categorically.

Preparing for Stricter EU Regulations and Future Bans

The EU AI Act targets high-risk applications. Remote biometric identification is listed among them. Conformity assessments will become mandatory. Regulatory requirements will intensify.

Some jurisdictions may ban certain uses entirely. Public space deployment faces particular scrutiny. Organizations should anticipate restrictions. Flexibility in architecture enables adaptation.

Proactive compliance beats reactive scrambling. Organizations investing in strong privacy practices now will adapt more easily. Cutting corners today creates future liability.

Conclusion: Who Watches Whom in the Era of Facial Recognition?

Technology has outpaced democratic deliberation. We face unprecedented identification capabilities without adequate safeguards. The balance between security and liberty requires urgent recalibration. Facial recognition transforms public spaces into surveillance grids where biometric data gets extracted continuously. Organizations deploying CCTV analytics must implement rigorous privacy controls and achieve strict GDPR compliance before deployment, not after problems emerge.

The Growing Gap Between Technology and Public Awareness

Most people don’t understand modern surveillance capabilities. They see cameras. They don’t grasp the analytical infrastructure behind them. This knowledge deficit prevents informed debate. Public education becomes essential for democratic decision-making.

Organizations exploit this ignorance. Opaque deployments avoid public scrutiny. Technical jargon obscures real implications. Informed consent proves impossible when people don’t understand what they’re consenting to.

Transparency initiatives must bridge this gap. Plain language explanations should be mandatory. Public demonstrations reveal actual capabilities. Democratic oversight requires educated citizens.

How GDPR Shapes the Future of AI Surveillance

European regulations establish global standards. Organizations serving EU markets often apply the same rules everywhere. This Brussels effect spreads privacy protections worldwide. Regulatory frameworks influence technical development.

Enforcement remains inconsistent but improving. Significant fines create compliance incentives. Public awareness drives regulatory attention. The framework evolves with technological advancement.

Ongoing dialogue between regulators and developers shapes outcomes. Collaborative approaches prove more effective than adversarial relationships. Mutual understanding produces workable solutions.

Ensuring Safety Without Surrendering Identity

Security and privacy aren’t inherently opposed. Thoughtful design achieves both objectives. Privacy-preserving technologies enable legitimate safety applications. The choice isn’t between total surveillance or complete vulnerability.

Proportional responses address genuine threats. Targeted approaches outperform mass surveillance. Effectiveness doesn’t require ubiquitous identification. Smart security respects human dignity.

The future depends on choices made today. We can have facial recognition that respects biometric data through proper GDPR compliance, robust privacy controls, and responsible CCTV analytics—or we can surrender autonomy to unchecked technological expansion. The decision isn’t technical. It’s fundamentally about what kind of society we want to become.